INTRODUCTION

In 2023, driven by OpenAI's ChatGPT, generative AI based on Large Language Models (LLMs) rose to global prominence. Operating through natural language prompts, it handles a wide range of tasks, from answering queries and editing text to generating code. The real value of AI, however, emerges when it is applied to real-world problems, and professionals across many domains are now actively exploring its potential.

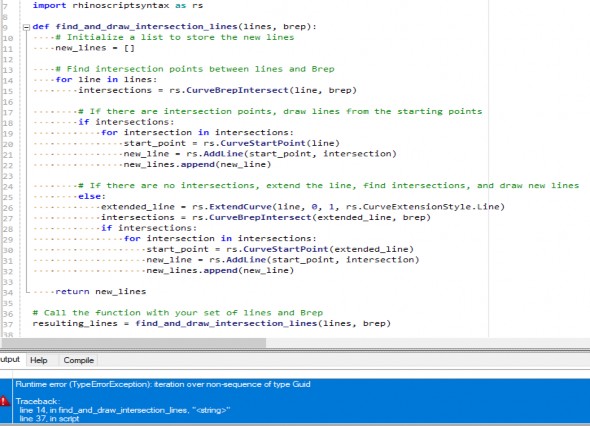

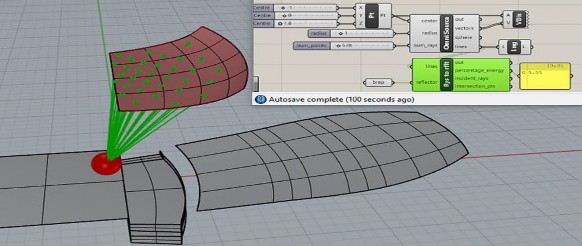

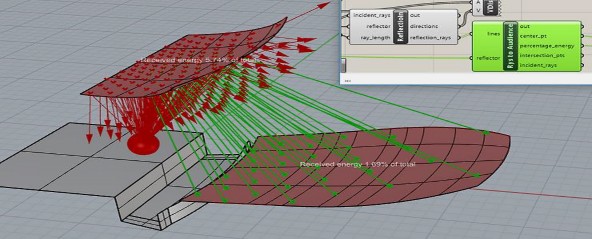

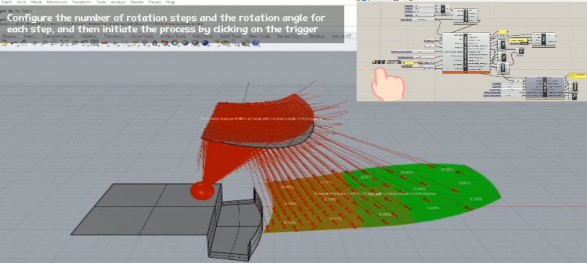

This paper explores how generative AI can be harnessed for acoustic design, specifically by combining its code generation capabilities with existing software. The focus is the synergy between OpenAI's generative pre-trained transformer (GPT) models, with a particular emphasis on ChatGPT, and Grasshopper, a parametric visual programming plugin for Rhino. A case study examines the use of OpenAI's GPTs to write code within Grasshopper for sound reflection analysis.

The primary goal is to explore the potential of ChatGPT, and of generative AI in general, as a valuable tool for acoustic design, and to inspire further research and development in this field.

BACKGROUND

Significance of exponential growth in technology

In 2023 we are witnessing a remarkable surge of generative AI tools, led by ChatGPT, being released to the public. What once seemed like science fiction is now reality, and the pace of iteration is astounding: much of the information I collected for this paper in May is already outdated.

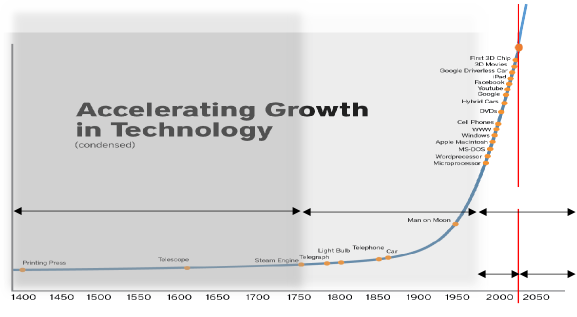

To grasp the significance of this recent progress, it is essential to take a broader view of the history of technology. In their 2014 bestseller "The Second Machine Age"1, Brynjolfsson and McAfee divided the history of technology into three phases: the period before the 1st Machine Age, the 1st Machine Age driven by combustion and electric engines, and the 2nd Machine Age powered by digital technology. As Figure 1 illustrates, each time technology enters a new era, its growth curve steepens. We currently find ourselves at an inflection point in the 2nd Machine Age, transitioning from the internet era to the AI era.

Brynjolfsson and McAfee highlighted that the exponential improvement in computing power and in the various components of digital technology would manifest primarily in AI technologies. The significance of exponential growth cannot be overstated. It is akin to the famous chessboard story, in which the inventor of chess asked the king for a reward of rice: a single grain on the first square, with the amount doubled on each subsequent square. The first half of the board remained manageable, but beyond the halfway point the required rice quickly exceeded the entire kingdom's resources. Nine years have passed since the book's publication, and we are likely reaching the halfway mark on this metaphorical chessboard.
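The arithmetic behind the chessboard story can be checked in a few lines. The Python sketch below is an illustration added here, not part of the original analysis, and assumes exactly one grain on the first square with strict doubling thereafter. Under that assumption the first 32 squares hold 2^32 - 1 grains (about 4.3 billion), whereas the whole board holds 2^64 - 1 grains (about 1.8 × 10^19).

```python
# Rice-on-the-chessboard arithmetic (illustrative sketch).
# Assumption: one grain on square 1, doubling on every following square,
# so square n holds 2**(n - 1) grains.


def grains_on_square(n: int) -> int:
    """Number of grains on square n (squares numbered 1 to 64)."""
    if not 1 <= n <= 64:
        raise ValueError("a chessboard has squares 1 to 64")
    return 2 ** (n - 1)


def total_grains(last_square: int) -> int:
    """Cumulative grains on squares 1..last_square (equals 2**last_square - 1)."""
    return sum(grains_on_square(n) for n in range(1, last_square + 1))


if __name__ == "__main__":
    first_half = total_grains(32)
    whole_board = total_grains(64)
    print(f"Squares 1-32: {first_half:,} grains")
    print(f"Squares 1-64: {whole_board:,} grains")
    print(f"Second half holds {whole_board - first_half:,} grains")
```

The total after n squares is 2^n - 1, so each square holds one grain more than all the preceding squares combined. This is why the second half of the board dwarfs the first.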

Vol. 45. Pt. 3. 2023

[Figure 1 labels: Pre-Machine Age; 1st Machine Age; 2nd Machine Age (Internet era, AI era); ChatGPT, 2023]

Figure 1. Accelerating growth in technology (modified from the figure in Komerath’s paper2)

The McKinsey Global Institute3 projects that by 2030 approximately 70% of companies will have adopted some form of AI technology, with nearly half of large companies implementing a full range of AI solutions. McKinsey also estimates that AI could deliver an additional US$13 trillion in global economic output by 2030, adding approximately 1.2% to global GDP growth each year.

How can AI technology generate value in the field of acoustics, and how can acoustic professionals make the most effective use of AI?

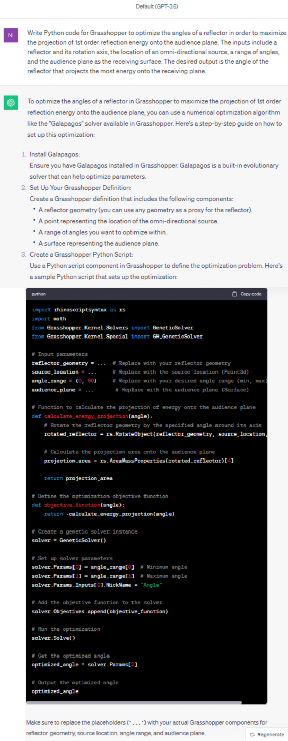

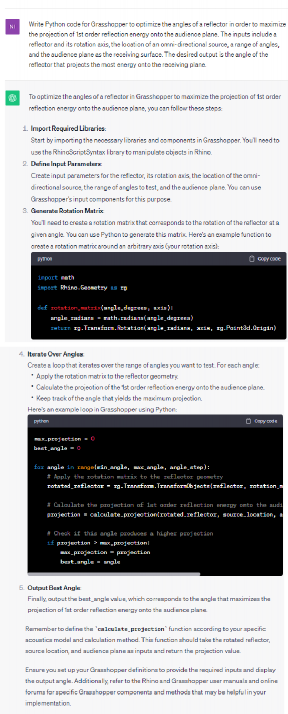

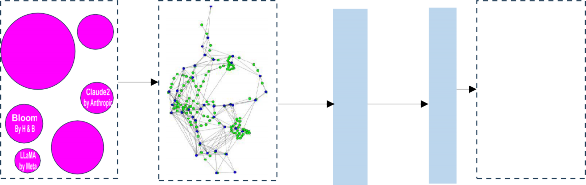

A practical path to harnessing AI for acoustic design: coding

One practical approach is to leverage generative AI programming applications based on LLMs, such as OpenAI’s ChatGPT (specifically GPT-3.5 and GPT-4), GitHub Copilot (built on OpenAI Codex, a GPT-3 descendant fine-tuned for code), Google's Bard powered by PaLM 2, Amazon's CodeWhisperer, Meta's LLaMA, Anthropic's Claude 2, and the BLOOM model from Hugging Face and BigScience. These LLM applications can generate code from natural language prompts. The practice of crafting such prompts to obtain the desired output is known as "prompt engineering". Integrating AI-generated code into existing applications offers a potentially efficient pathway to harnessing AI capabilities, and it is the primary focus of this paper.

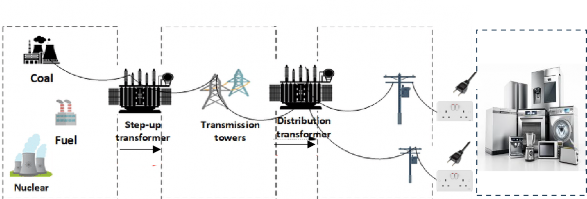

Figure 2 illustrates this process by analogy with the distribution of electric power to electrical appliances. LLMs function as power stations, with their parameter count serving as a rough indicator of the digital power they can store. This digital power is transmitted via internet infrastructure to a broad user base, harnessed for code generation through prompt engineering, and then applied to current software applications.

To harness AI-generated code, these software applications must include a coding interface, akin to the sockets and plugs through which appliances draw electricity from power stations. Applications such as Rhino with Grasshopper, Revit with Dynamo, and Excel-based add-ins fall into this category and provide a convenient means of using AI-generated code. Other applications, such as Odeon, SoundPlan and Insul, lack such a component. To use the power of LLMs, they will need either to build in such an interface or to integrate a coding component. Otherwise, newer LLM-powered applications may swiftly replace them.


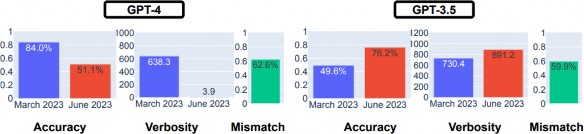

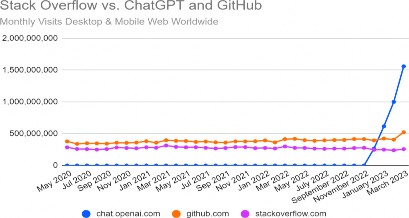

At the time of writing, OpenAI's GPT-4 is widely regarded as the leader in coding ability4, and ChatGPT is favoured by developers, as shown in Figure 35. This paper therefore focuses on OpenAI's GPTs, with a particular emphasis on ChatGPT.

[Figure 2 panels. Upper: electric power transmission from electric power stations (coal, fuel, nuclear) via step-up transformers, transmission towers and distribution transformers to appliances. Lower: digital power transmission from LLMs to current applications for acoustic consultancy. It runs from digital power stations (foundation LLMs such as GPT-4 and GPT-3.5 by OpenAI, with GPT-4 labelled 1.7 trillion parameters, and PaLM 2 by Google), via transmission over the internet, fine-tuning and prompt engineering, to LLM applications for coding (ChatGPT, Bard, LLaMA, GitHub Copilot, CodeWhisperer, etc.). From there it passes through a customisable coding component as the interface to current software applications for acoustic measurement, acoustic modelling and calculations, acoustic design, and acoustic reporting.]

Figure 2. An analogy between the transmission of digital power to existing applications and of electric power to appliances (the sizes of the LLMs are based on Wikipedia6 and Khawaja7)

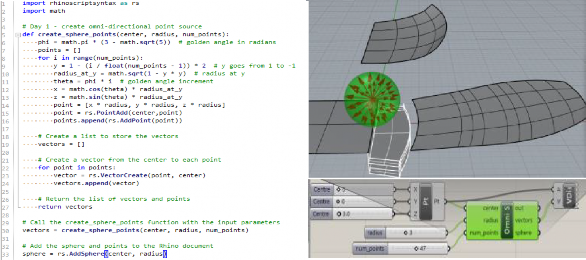

Grasshopper for Rhino

Grasshopper is a visual programming language and graphical algorithm editor that runs as a plugin for Rhino, a widely used 3D computer-aided design (CAD) application. It provides three types of scripting nodes, supporting Python, C# and Visual Basic respectively. These nodes can act as a bridge to the power of generative AI tools such as OpenAI’s GPT applications.
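As an illustration of the kind of logic that might be placed in a Python scripting node for sound reflection analysis, the sketch below finds where a sound ray meets a planar surface and computes its specular reflection, r = d - 2(d·n)n, where d is the incident direction and n the unit surface normal. This is a hypothetical, dependency-free example. It uses plain (x, y, z) tuples so it runs outside Rhino, and the function names (`intersect_plane`, `reflect`) are illustrative. It is not the code used in the case study. Inside Grasshopper, one would normally work with Rhino's own geometry types instead.

```python
# Hypothetical sketch of sound-ray reflection logic that could be adapted
# for a Grasshopper Python node. Plain (x, y, z) tuples are used so the
# example runs outside Rhino; no Rhino or Grasshopper API is assumed.
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        raise ValueError("cannot normalise a zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def intersect_plane(origin: Vec3, direction: Vec3,
                    plane_point: Vec3, normal: Vec3) -> Optional[Vec3]:
    """Point where the ray origin + t*direction (t > 0) meets the plane, or None."""
    denom = dot(direction, normal)
    if abs(denom) < 1e-12:
        return None  # ray is parallel to the plane
    offset = (plane_point[0] - origin[0],
              plane_point[1] - origin[1],
              plane_point[2] - origin[2])
    t = dot(offset, normal) / denom
    if t <= 0.0:
        return None  # plane lies behind the ray origin
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


def reflect(direction: Vec3, normal: Vec3) -> Vec3:
    """Specular reflection r = d - 2(d.n)n, with n normalised first."""
    n = normalize(normal)
    k = 2.0 * dot(direction, n)
    return (direction[0] - k * n[0],
            direction[1] - k * n[1],
            direction[2] - k * n[2])


if __name__ == "__main__":
    source = (0.0, 0.0, 1.5)           # example source position (m)
    ray = (1.0, 0.0, 1.0)              # ray heading up towards the ceiling
    ceiling_point = (0.0, 0.0, 3.0)    # any point on a flat ceiling at 3 m
    ceiling_normal = (0.0, 0.0, -1.0)  # normal pointing down into the room
    hit = intersect_plane(source, ray, ceiling_point, ceiling_normal)
    print("Reflection point:", hit)
    print("Reflected direction:", reflect(ray, ceiling_normal))
```

Consider a ray leaving (0, 0, 1.5) in direction (1, 0, 1) towards a flat ceiling at 3 m. The reflection point is (1.5, 0, 3) and the reflected direction is (1, 0, -1).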

While 3D room acoustic modelling software such as Odeon, EASE and CATT-Acoustic is valuable for predicting room acoustic parameters, it often lacks the flexibility to interact with architectural models. This makes it time-consuming to study the design iterations needed to optimise a design. In contrast, Grasshopper excels at parametric design and integrates seamlessly with Rhino. Users can build models that update in real time within Rhino as parameters are modified. This makes Grasshopper a powerful tool for exploring design variations and iterations.
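To illustrate the parametric workflow described above, the hypothetical sketch below sweeps a single design parameter, the ceiling height. For each candidate height it reports how much later the first-order ceiling reflection arrives than the direct sound. It uses the image-source construction (mirroring the source in the ceiling plane) for a flat horizontal ceiling and assumes a speed of sound of 343 m/s. The source height, receiver height and distance are made-up example values, and the function names are illustrative only. In Grasshopper, the swept parameter would typically come from a slider driving the Rhino model.

```python
# Hypothetical parametric sweep: delay of the first-order ceiling reflection
# relative to the direct sound, as the ceiling height is varied.
# Assumptions: flat horizontal ceiling, image-source construction,
# speed of sound 343 m/s; all geometry values below are made-up examples.
import math
from typing import Iterable, List, Tuple

SPEED_OF_SOUND = 343.0  # m/s, roughly air at 20 °C


def ceiling_reflection_delay_ms(source_height: float, receiver_height: float,
                                horizontal_distance: float, ceiling_height: float,
                                c: float = SPEED_OF_SOUND) -> float:
    """Extra travel time (ms) of the ceiling reflection over the direct path."""
    if ceiling_height <= max(source_height, receiver_height):
        raise ValueError("ceiling must be above both source and receiver")
    direct = math.hypot(horizontal_distance, source_height - receiver_height)
    # Mirror the source in the ceiling plane to obtain the image source.
    image_height = 2.0 * ceiling_height - source_height
    reflected = math.hypot(horizontal_distance, image_height - receiver_height)
    return 1000.0 * (reflected - direct) / c


def sweep_ceiling_heights(heights: Iterable[float], source_height: float,
                          receiver_height: float,
                          horizontal_distance: float) -> List[Tuple[float, float]]:
    """Evaluate the delay for each candidate ceiling height (one design iteration each)."""
    return [(h, ceiling_reflection_delay_ms(source_height, receiver_height,
                                            horizontal_distance, h))
            for h in heights]


if __name__ == "__main__":
    candidate_heights = [3.0 + 0.5 * i for i in range(7)]  # 3.0 m to 6.0 m
    for height, delay in sweep_ceiling_heights(candidate_heights, 1.5, 1.2, 10.0):
        print(f"ceiling {height:.1f} m -> reflection delay {delay:.1f} ms")
```

Each call to `ceiling_reflection_delay_ms` corresponds to one design iteration. Wrapping it in a sweep mimics what moving a Grasshopper slider does interactively.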

Case study of the synergy of OpenAI’s GPT and Grasshopper